NEWS UPDATES (5)

Autonomous driving: Safety first

Self-driving vehicle technology has made significant advances; now the industry needs a standard for driving safely. “Today there is no regulatory framework that would allow anyone to commercially deploy an automated vehicle. The challenge that we as an industry face is, ‘How can we prove that these vehicles are ready for the road?’” says Jack Weast, senior principal engineer at Intel.

Autonomous vehicles are programmed, responsive robotic systems, and as such they operate on three primary stages of functionality:

SENSING:

accurately perceiving the surrounding environment.PLANNING:

involving the decision-making process, when the artificial intelligence (AI) embedded within the vehicle recommends taking actions such as changing lanes, braking, or accelerating.ACTING:

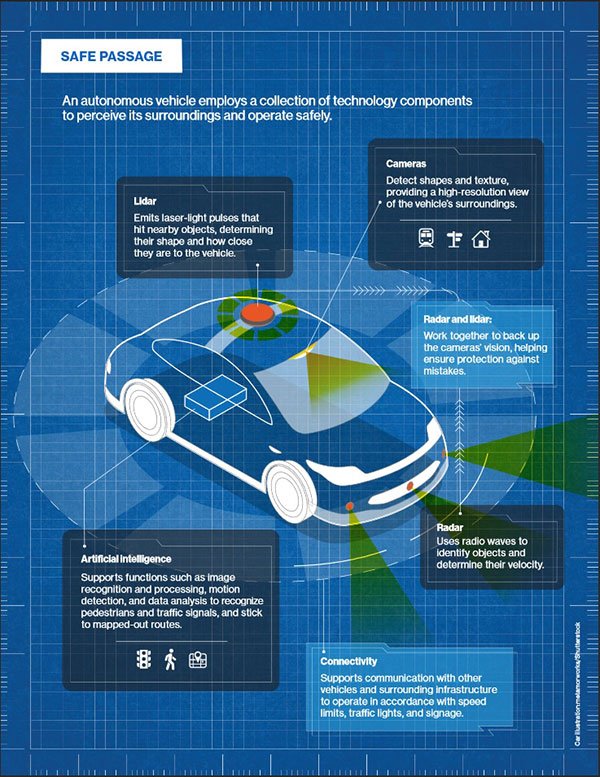

putting the recommendations to work to move and operate.The sensing system comprises onboard cameras, radar, and lidar, a radar-like laser imaging system. Long-range, 360-degree cameras, which can detect shapes and “texture”—for example, words on a sign—provide a high-resolution view of the autonomous vehicle’s surroundings. That level of definition helps the vehicle understand the semantics of the road, including lane markings, intersections, traffic lights, and where pedestrians are and what their likely next moves will be. Cameras then create a 3D image of the surrounding terrain, other traffic, obstacles, and speed and distance measurements.

The radar and lidar work together to provide backup for the camera’s sensing picture, helping provide safety and protection against mistakes. “Maybe for some reason the radar was confused by a metal plate in the road. No problem—the camera is not bothered by that,” says Weast. “Maybe the windshield has mud on it or something, and the camera can’t see for a moment. We still have the radar and lidar that can provide information so we can help keep the passengers safe.”
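The backup idea Weast describes can be illustrated with a toy sketch. It assumes each sensor reports a single distance to the nearest obstacle, or nothing when it is temporarily blind, and it takes the median of the usable readings so that one confused sensor cannot dominate. The names `Reading` and `fuse_obstacle_distance` are hypothetical, and production systems fuse far richer data than a single number; this is only a minimal illustration of redundancy, not how any particular vendor does it.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class Reading:
    sensor: str                   # e.g. "camera", "radar", or "lidar"
    distance_m: Optional[float]   # None means no usable reading right now


def fuse_obstacle_distance(readings: List[Reading]) -> Optional[float]:
    """Combine redundant distance estimates into one value.

    Sensors with no usable reading are skipped. The median of the
    remaining values is returned, so with three working sensors a single
    outlier (such as a radar echo from a metal plate) is outvoted.
    Returns None when every sensor is blind.
    """
    usable = [r.distance_m for r in readings if r.distance_m is not None]
    if not usable:
        return None
    return median(usable)


if __name__ == "__main__":
    # Radar is fooled by something close; camera and lidar agree.
    confused_radar = [
        Reading("camera", 42.0),
        Reading("radar", 3.0),
        Reading("lidar", 42.3),
    ]
    # Camera is blocked (mud on the windshield); radar and lidar still report.
    blocked_camera = [
        Reading("camera", None),
        Reading("radar", 41.8),
        Reading("lidar", 42.2),
    ]
    print(fuse_obstacle_distance(confused_radar))
    print(fuse_obstacle_distance(blocked_camera))
```

With only two usable sensors the median is simply their average, so the sketch degrades gracefully but can no longer outvote a faulty reading; that is one reason three independent sensing modes are more robust than two.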

These combined sensor technologies don’t become fatigued or distracted the way humans do and can accurately perceive objects over great distances, even in low light or inclement weather. They provide “a 360-degree view of the landscape that exceeds the capabilities of a human,” says Sumit Sadana, chief business officer for semiconductor producer Micron Technology.

Occasional misinterpretation remains possible. A recent study describes “adversarial patches,” or images that can be used to fool the AI in autonomous vehicles into thinking it sees a stop sign, for example. But, Weast says, “these kinds of academic visual tricks are fun in academic circles but rarely affect a real system designed by companies that know better.”

The thinking vehicle

“Planning,” in the sensing-planning-acting triad, is where safety truly comes into play: it’s where decisions are made in response to what’s happening on the road.

It’s also where the programming in autonomous vehicles manifests itself. But there are deeper questions of philosophy and responsibility here: who decides how these vehicles make their own decisions? Who sets the safety standards? Intel’s Responsibility-Sensitive Safety (RSS) framework is one example of the industry working to determine what it means for an autonomous vehicle to drive safely. As a proposed industry standard, it is technology-neutral, so it works with technologies from any manufacturer, and it is intended to foster trust between people and autonomous vehicles. RSS is designed to help people understand what autonomous vehicles do when presented with an unsafe situation. With the RSS framework, Intel intends to ultimately “democratize safety,” or help ensure that safety is the responsibility of all industry participants.

“Today there is no regulatory framework that would allow anyone to commercially deploy an automated vehicle. The vehicles on the road today are there for development and test purposes only,” says Weast. “The challenge that we as an industry face is, ‘How can we prove that these vehicles are ready for the road?’”

The RSS framework models what most humans do when they are driving, riding a bicycle, or walking. Once an autonomous vehicle has determined its location and the relative whereabouts of nearby pedestrian and vehicular traffic, the “acting” component is driven primarily by existing automotive technology like the power and braking systems, albeit controlled by the vehicle’s onboard processing. The vehicle’s goal, Weast says, is to drive carefully enough to make its own safe decisions.
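To make the idea of formalized careful driving concrete, here is a sketch of one rule from the published RSS model: the minimum safe following distance between two cars traveling in the same direction. The formula comes from the RSS paper by Shalev-Shwartz, Shammah, and Shashua, not from this article, and the function name and the parameter values in the usage example are illustrative assumptions rather than values from any standard. The rule assumes the worst case: during its response time the rear car may still be accelerating and then brakes only gently, while the front car brakes as hard as it can.

```python
def rss_min_following_distance(
    v_rear: float,         # rear (following) car speed, m/s
    v_front: float,        # front (lead) car speed, m/s
    response_time: float,  # rear car's response time, s
    a_max_accel: float,    # max acceleration of rear car during response time, m/s^2
    a_min_brake: float,    # minimum braking the rear car is assumed to apply, m/s^2
    a_max_brake: float,    # maximum braking the front car might apply, m/s^2
) -> float:
    """Minimum safe longitudinal gap per the RSS paper, in meters."""
    # Speed the rear car could reach by the end of its response time.
    v_rear_after = v_rear + response_time * a_max_accel

    gap = (
        v_rear * response_time                    # distance covered while reacting
        + 0.5 * a_max_accel * response_time ** 2  # extra distance from accelerating
        + v_rear_after ** 2 / (2 * a_min_brake)   # rear car's gentle-braking distance
        - v_front ** 2 / (2 * a_max_brake)        # front car's hard-braking distance
    )
    # A negative value means no gap is required; clamp to zero.
    return max(gap, 0.0)


if __name__ == "__main__":
    # Illustrative numbers only: both cars at 20 m/s (72 km/h).
    print(rss_min_following_distance(20.0, 20.0, 1.0, 2.0, 4.0, 8.0))
```

If the actual gap is smaller than this value, the situation counts as dangerous under the model and the rear car is expected to respond, for example by braking.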

“It should never do anything that could initiate an accident or introduce risk to others,” Weast says. “And it should be cautious while being able to make reasonable assumptions about the behavior of others.”

The potential remains, however, for certain combinations of factors to cause confusion. “Think of what foggy conditions do to a human driver. [That would require] an autonomous software system to selectively filter out that input,” says Fred Bower, distinguished engineer at the Lenovo Data Center Group. Those conditions could be exacerbated by situations that human drivers encounter and deal with on a daily basis. “Accident scenes, construction zones, malfunctioning signals, and a myriad of other situations that are routine to an experienced human driver are a real challenge for the current autonomous fleet,” says Bower.

Industry alignment

Industry participants are joining forces to resolve lingering technological, legal, and ethical issues related to autonomous-vehicle operations, but it’s a work in progress. Gill Pratt, CEO of the recently formed Toyota Research Institute, believes autonomous-vehicle operations will expand in the near future but realizes the industry has a long way to go. “None of us in the automobile or IT industries are close to achieving true level 5 autonomy, not even close,” he says, referring to the highest of the driving-automation levels, which requires no human intervention. Lower levels, such as 1 and 2, require human control.

Pratt believes that within a decade auto manufacturers will have level 4 vehicles, which run without the need for human input, operating in specific situations.

But progress can’t take place in the automotive industry alone. To make autonomous driving a reality and ensure continued and consistent safe operational oversight, the auto industry, government regulatory agencies, and others must all weigh in and participate to help ensure the safety of autonomous-vehicle riders, other vehicles, and pedestrians. Only then will the technology gain widespread public and commercial acceptance.

“Let’s have a common understanding and hear from consumers,” says Weast. “Let’s hear from governments and let’s understand what do they need to be convinced of the safety of these vehicles.”

Genevieve Bell, distinguished professor of engineering and computer science at the Australian National University and a senior fellow at Intel, framed the challenge of incorporating autonomous systems into society as a series of unanswered questions.

“Who gets to decide what’s autonomous and what isn’t? Who gets to decide how that’s regulated? How it’s secured? What its network implications are?” Bell asked during a presentation in San Francisco in October 2018. “Those are not just questions for computer scientists and engineers. Those are questions for philosophers and people in the humanities and people who make laws.”